```c
/* Excerpt: only selected source lines are shown; the ellipsis comments mark omitted code. */
int nv, dir, beg_dir, end_dir;
/* ... */
static double ***T, ***C_dt[NVAR], **dcoeff;
/* ... */
#if DIMENSIONAL_SPLITTING == YES
beg_dir = end_dir = g_dir;
/* ... */
#if (PARABOLIC_FLUX & EXPLICIT)
/* ... */
#if DIMENSIONAL_SPLITTING == NO
if (C_dt[RHO] == NULL){
/* ... */
memset ((void *)C_dt[nv][k][j], '\0', NX1_TOT*sizeof(double));
/* ... */
#if (RESISTIVITY == EXPLICIT) && (defined STAGGERED_MHD)
/* ... */
#if THERMAL_CONDUCTION == EXPLICIT
/* ... */
TOT_LOOP(k,j,i) T[k][j][i] = d->Vc[PRS][k][j][i]/d->Vc[RHO][k][j][i];
/* ... */
for (dir = beg_dir; dir <= end_dir; dir++){
/* ... */
#if (RESISTIVITY == EXPLICIT) && !(defined STAGGERED_MHD)
/* ... */
for ((*ip) = 0; (*ip) < indx.ntot; (*ip)++) {
VAR_LOOP(nv) state.v[(*ip)][nv] = d->Vc[nv][k][j][i];
state.flag[*ip] = d->flag[k][j][i];
/* ... */
Riemann (&state, indx.beg - 1, indx.end, Dts->cmax, grid);
/* ... */
#if (PARABOLIC_FLUX & EXPLICIT)
/* ... */
#if UPDATE_VECTOR_POTENTIAL == YES
/* ... */
for ((*ip) = indx.beg; (*ip) <= indx.end; (*ip)++) {
VAR_LOOP(nv) UU[nv][k][j][i] += state.rhs[*ip][nv];
/* ... */
for ((*ip) = indx.beg; (*ip) <= indx.end; (*ip)++) {
VAR_LOOP(nv) UU[k][j][i][nv] += state.rhs[*ip][nv];
/* ... */
for ((*ip) = indx.beg; (*ip) <= indx.end; (*ip)++) {
#if DIMENSIONAL_SPLITTING == NO
/* ... */
C_dt[0][k][j][i] += 0.5*( Dts->cmax[(*ip)-1]
                        + Dts->cmax[*ip])*inv_dl[*ip];
/* ... */
#if (PARABOLIC_FLUX & EXPLICIT)
dl2 = 0.5*inv_dl[*ip]*inv_dl[*ip];
/* ... */
C_dt[nv][k][j][i] += (dcoeff[*ip][nv]+dcoeff[(*ip)-1][nv])*dl2;
/* ... */
#elif DIMENSIONAL_SPLITTING == YES
/* ... */
#if (PARABOLIC_FLUX & EXPLICIT)
dl2 = inv_dl[*ip]*inv_dl[*ip];
/* ... */
#if (ENTROPY_SWITCH) && (RESISTIVITY == EXPLICIT)
/* ... */
#if DIMENSIONAL_SPLITTING == YES
/* ... */
#if (PARABOLIC_FLUX & EXPLICIT)
/* ... */
#if (PARABOLIC_FLUX & EXPLICIT)
```
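In the unsplit branch of the listing, each zone accumulates an inverse advection time step from the interface signal speeds (`Dts->cmax`). When parabolic terms are treated explicitly, it also accumulates an inverse diffusion time step from `dcoeff`. The C sketch below reproduces that arithmetic on a single 1D strip and then takes the maximum over the strip. The functions `accumulate_inv_dt` and `max_over` are hypothetical. The sketch assumes that `cmax[i]` and `dcoeff[i]` are stored at interface i+1/2, so zone i averages interfaces i-1/2 and i+1/2; the `[(*ip)-1]` / `[*ip]` indexing suggests this layout, but it is not confirmed here.

```c
#include <assert.h>

/* Hypothetical helper (not part of PLUTO): add one sweep's contribution to the
   per-zone inverse time steps, following the unsplit branch of the listing.
   Assumes cmax[i] and dcoeff[i] are stored at interface i+1/2. */
static void accumulate_inv_dt(const double *cmax, const double *dcoeff,
                              const double *inv_dl, int beg, int end,
                              double *c_adv, double *c_par)
{
  assert(beg >= 1);  /* zone beg reads interface beg-1 */
  for (int i = beg; i <= end; i++) {
    /* advection: mean signal speed of the two faces divided by the zone width */
    c_adv[i] += 0.5*(cmax[i-1] + cmax[i])*inv_dl[i];

    /* diffusion: mean coefficient of the two faces divided by the width squared */
    double dl2 = 0.5*inv_dl[i]*inv_dl[i];
    c_par[i] += (dcoeff[i] + dcoeff[i-1])*dl2;
  }
}

/* Hypothetical reduction: largest per-zone value over [beg, end]. */
static double max_over(const double *c, int beg, int end)
{
  double m = 0.0;
  for (int i = beg; i <= end; i++) {
    if (c[i] > m) m = c[i];
  }
  return m;
}
```

In a multidimensional unsplit run, the per-zone values would be summed over all sweep directions before the maximum is taken. That final reduction is not visible in the listing, so its placement here is an assumption.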

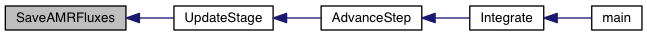

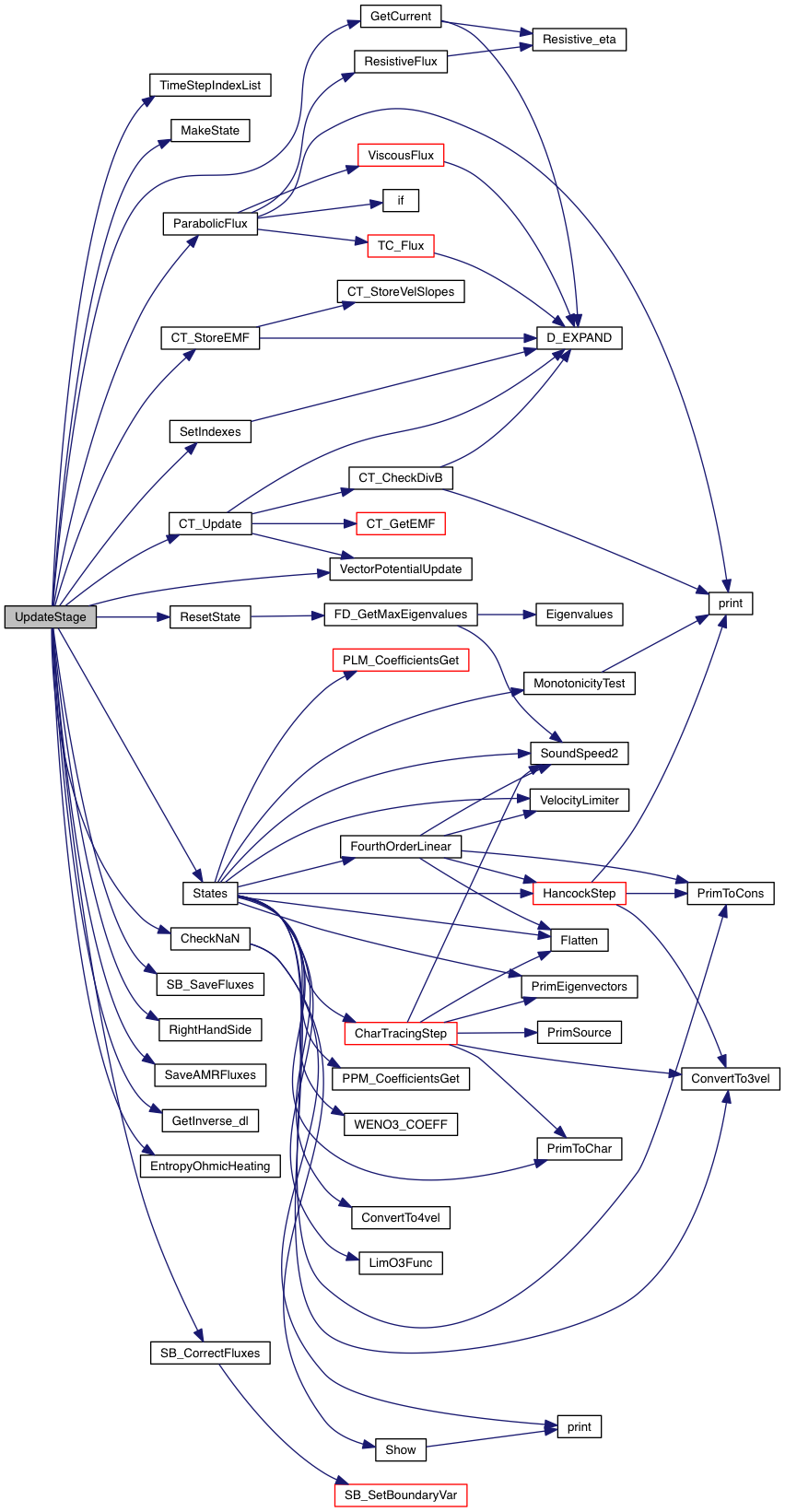
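When the listing copies a 1D strip of `d->Vc` into `state.v`, it loops on `(*ip)` but indexes with `[k][j][i]`. This suggests that `ip` points at whichever of `i`, `j`, `k` varies along the current sweep direction, while the other two indices stay fixed at the transverse position. How PLUTO actually sets `ip` (presumably through `SetIndexes` or `TRANSVERSE_LOOP`) is an assumption here. The sketch below shows that pointer-to-index pattern on a toy array. `extract_pencil` and the `DIR_*` labels are hypothetical.

```c
#include <assert.h>

/* Toy array extents (hypothetical): q[k][j][i] with k < NK, j < NJ, i < NI. */
#define NK 2
#define NJ 3
#define NI 4

enum { DIR_X1, DIR_X2, DIR_X3 };  /* hypothetical sweep-direction labels */

/* Copy the 1D pencil of q through (k, j, i) along direction dir into buf.
   ip points at the index that varies along the sweep; the other two indices
   keep the transverse position. Returns the number of values copied. */
static int extract_pencil(double q[NK][NJ][NI], int dir,
                          int k, int j, int i, double *buf)
{
  int *ip;
  int ntot;

  if (dir == DIR_X1)      { ip = &i; ntot = NI; }
  else if (dir == DIR_X2) { ip = &j; ntot = NJ; }
  else { assert(dir == DIR_X3); ip = &k; ntot = NK; }

  for ((*ip) = 0; (*ip) < ntot; (*ip)++) {
    buf[*ip] = q[k][j][i];  /* the same expression works for every direction */
  }
  return ntot;
}
```

With this design, the body of the copy loop does not depend on the direction. Only the choice of which index `ip` aliases does.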

static void SaveAMRFluxes(const State_1D *, double **, int, int, Grid *)

void CT_StoreEMF(const State_1D *state, int beg, int end, Grid *grid)

double ** v

Cell-centered primitive variables at the base time level, v[i] = $\vec{V}^n_i$.

double **** Vs

The main four-index data array used for face-centered staggered magnetic fields.

void ResetState(const Data *, State_1D *, Grid *)

int end

Global end index for the local array.

void EntropyOhmicHeating(const Data *, Data_Arr, double, Grid *)

double **** J

Electric current defined as curl(B).

void GetCurrent(const Data *d, int dir, Grid *grid)

void States(const State_1D *, int, int, Grid *)

int g_intStage

Gives the current integration stage of the time stepping method (predictor = 0, 1st corrector = 1...

double **** Vc

The main four-index data array used for cell-centered primitive variables.

double * GetInverse_dl(const Grid *)

void SB_CorrectFluxes(Data_Arr U, double t, double dt, Grid *grid)

void CT_Update(const Data *d, Data_Arr Bs, double dt, Grid *grid)

#define ARRAY_3D(nx, ny, nz, type)

double * cmax

Maximum signal velocity for hyperbolic eqns.

#define FOR_EACH(nv, beg, list)

void MakeState(State_1D *)

double inv_dtp

Inverse of diffusion (parabolic) time step, $\eta/\Delta l^2$.

double inv_dta

Inverse of advection (hyperbolic) time step, $\lambda/\Delta l$.

int g_i

x1 grid index when sweeping along the x2 or x3 direction.

int CheckNaN(double **, int, int, int)

#define TOT_LOOP(k, j, i)

int g_dir

Specifies the current sweep or direction of integration.

int beg

Global start index for the local array.

int g_j

x2 grid index when sweeping along the x1 or x3 direction.

long int IEND

Upper grid index of the computational domain in the X1 direction for the local processor...

void SetIndexes(Index *indx, Grid *grid)

void RightHandSide(const State_1D *state, Time_Step *Dts, int beg, int end, double dt, Grid *grid)

int g_k

x3 grid index when sweeping along the x1 or x2 direction.

long int NMAX_POINT

Maximum number of points among the three directions, boundaries excluded.

double * bn

Face magnetic field, bn = bx(i+1/2).

void SB_SaveFluxes(State_1D *state, Grid *grid)

void ParabolicFlux(Data_Arr V, Data_Arr J, double ***T, const State_1D *state, double **dcoeff, int beg, int end, Grid *grid)

long int KBEG

Lower grid index of the computational domain in the X3 direction for the local processor...

#define ARRAY_2D(nx, ny, type)

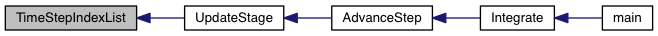

static intList TimeStepIndexList()

long int KEND

Upper grid index of the computational domain in the X3 direction for the local processor...

void VectorPotentialUpdate(const Data *d, const void *vp, const State_1D *state, const Grid *grid)

long int JBEG

Lower grid index of the computational domain in the X2 direction for the local processor...

long int JEND

Upper grid index of the computational domain in the X2 direction for the local processor...

#define TRANSVERSE_LOOP(indx, ip, i, j, k)

long int NX1_TOT

Total number of zones in the X1 direction (boundaries included) for the local processor.

long int IBEG

Lower grid index of the computational domain in the X1 direction for the local processor...
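In the listing, `TOT_LOOP` computes `T = p/rho` in every zone. The sketch below assumes that `TOT_LOOP` visits all zones including ghost zones (0 to `NX1_TOT - 1` in X1, and likewise in X2 and X3), whereas the computational domain on the local processor spans `IBEG` to `IEND`. The macro `TOT_LOOP_SKETCH`, the toy sizes, and the relation "first active index = number of ghost zones" are illustrative assumptions, not PLUTO's definitions.

```c
#include <assert.h>

/* Toy sizes (hypothetical): 2 active zones per direction plus 1 ghost zone per side. */
#define NGHOST 1
#define N1 2
#define N2 2
#define N3 2
#define N1_TOT (N1 + 2*NGHOST)
#define N2_TOT (N2 + 2*NGHOST)
#define N3_TOT (N3 + 2*NGHOST)
#define I1_BEG NGHOST             /* first active X1 zone, playing the role of IBEG */
#define I1_END (NGHOST + N1 - 1)  /* last active X1 zone, playing the role of IEND */

/* Assumed TOT_LOOP-style macro: every zone, ghost zones included. */
#define TOT_LOOP_SKETCH(k, j, i)        \
  for ((k) = 0; (k) < N3_TOT; (k)++)    \
    for ((j) = 0; (j) < N2_TOT; (j)++)  \
      for ((i) = 0; (i) < N1_TOT; (i)++)

/* T = p/rho in all zones, as in the thermal-conduction branch of the listing. */
static void compute_T(double prs[N3_TOT][N2_TOT][N1_TOT],
                      double rho[N3_TOT][N2_TOT][N1_TOT],
                      double T[N3_TOT][N2_TOT][N1_TOT])
{
  int k, j, i;
  TOT_LOOP_SKETCH(k, j, i) {
    assert(rho[k][j][i] > 0.0);  /* density must be positive */
    T[k][j][i] = prs[k][j][i]/rho[k][j][i];
  }
}
```

To restrict a loop to the active zones, you would instead run `i` from `I1_BEG` to `I1_END`, with the analogous bounds in X2 and X3.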

$$ U \quad\Longrightarrow\quad U + \Delta t\, R(V) $$
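The update rule above adds Δt times a right-hand side R(V), built from flux differences, to the conservative variables. In the listing this appears as `UU[...] += state.rhs[*ip][nv]`. There, `rhs` presumably already includes the Δt factor, since `RightHandSide` receives `dt`; that is an assumption. The sketch below applies the rule to 1D linear advection u_t + a u_x = 0 with first-order upwind fluxes and periodic boundaries. It is a generic finite-volume illustration with hypothetical names, not PLUTO's `RightHandSide`.

```c
#include <assert.h>

/* One forward-Euler stage U <- U + dt*R(U) for u_t + a u_x = 0 (a > 0),
   with first-order upwind interface fluxes and periodic boundaries.
   Generic finite-volume sketch; the names are hypothetical. */
static void advect_step(double *u, double *flux, double *rhs, int n,
                        double a, double dx, double dt)
{
  assert(n > 1 && a > 0.0 && dx > 0.0);

  /* flux[i] = F_{i+1/2}; for a > 0 the upwind state is the left zone i */
  for (int i = 0; i < n; i++) flux[i] = a*u[i];

  /* R_i = -(F_{i+1/2} - F_{i-1/2})/dx, with F_{-1/2} = F_{n-1/2} (periodic) */
  for (int i = 0; i < n; i++) {
    int im1 = (i == 0) ? n - 1 : i - 1;
    rhs[i] = -(flux[i] - flux[im1])/dx;
  }

  /* U <- U + dt*R(U) */
  for (int i = 0; i < n; i++) u[i] += dt*rhs[i];
}
```

Every interface flux enters two neighbouring zones with opposite signs. As a result, the total of u over the periodic grid is unchanged by the step, which is the property the conservative form of the update is meant to guarantee.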