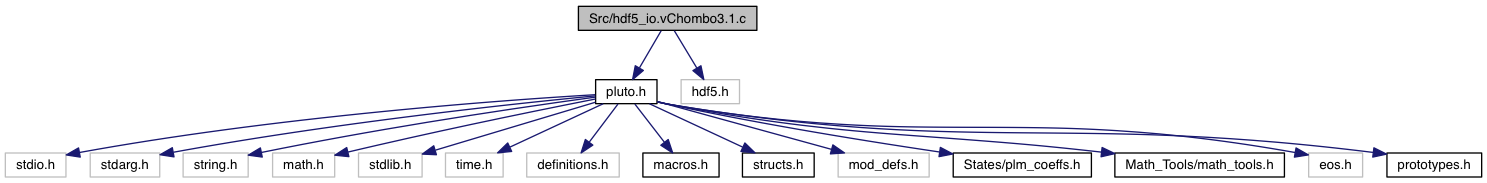

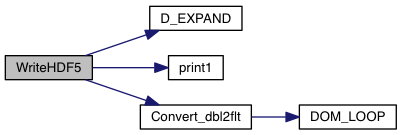

Write data to disk using hdf5 format in single or double precision.

56 hid_t dataspace, memspace, dataset;

57 hid_t strspace, stratt, string_type;

58 hid_t file_identifier, group;

59 hid_t file_access = 0;

61 hid_t plist_id_mpiio = 0;

72 static float ****node_coords, ****cell_coords;

73 char filename[512], filenamexmf[512];

74 char *coords =

"/cell_coords/X /cell_coords/Y /cell_coords/Z ";

75 char *cname[] = {

"X",

"Y",

"Z"};

77 int ierr, rank, nd, nv, ns, nc, ngh, ii, jj, kk;

78 int n1p, n2p, n3p, nprec;

86 if (node_coords == NULL) {

93 node_coords =

ARRAY_4D(3, n3p, n2p, n1p,

float);

96 for (kk = 0; kk < n3p; kk++) { x3 = grid[

KDIR].

xl[

KBEG+kk];

97 for (jj = 0; jj < n2p; jj++) { x2 = grid[

JDIR].

xl[

JBEG+jj];

98 for (ii = 0; ii < n1p; ii++) { x1 = grid[

IDIR].

xl[

IBEG+ii];

100 node_coords[

JDIR][kk][jj][ii] = 0.0;

101 node_coords[

KDIR][kk][jj][ii] = 0.0;

103 #if GEOMETRY == CARTESIAN || GEOMETRY == CYLINDRICAL

105 node_coords[

JDIR][kk][jj][ii] = (float)x2; ,

106 node_coords[

KDIR][kk][jj][ii] = (float)x3;)

108 D_EXPAND(node_coords[

IDIR][kk][jj][ii] = (

float)(x1*cos(x2)); ,

109 node_coords[

JDIR][kk][jj][ii] = (float)(x1*sin(x2)); ,

110 node_coords[

KDIR][kk][jj][ii] = (float)(x3);)

113 D_EXPAND(node_coords[

IDIR][kk][jj][ii] = (

float)(x1*sin(x2)); ,

114 node_coords[

JDIR][kk][jj][ii] = (float)(x1*cos(x2)); ,

115 node_coords[

KDIR][kk][jj][ii] = 0.0;)

117 D_EXPAND(node_coords[

IDIR][kk][jj][ii] = (

float)(x1*sin(x2)*cos(x3)); ,

118 node_coords[

JDIR][kk][jj][ii] = (float)(x1*sin(x2)*sin(x3)); ,

119 node_coords[

KDIR][kk][jj][ii] = (float)(x1*cos(x2));)

122 print1 (

"! HDF5_IO: Unknown geometry\n");

127 for (kk = 0; kk <

NX3; kk++) { x3 = grid[

KDIR].

x[

KBEG+kk];

128 for (jj = 0; jj <

NX2; jj++) { x2 = grid[

JDIR].

x[

JBEG+jj];

129 for (ii = 0; ii <

NX1; ii++) { x1 = grid[

IDIR].

x[

IBEG+ii];

131 cell_coords[

JDIR][kk][jj][ii] = 0.0;

132 cell_coords[

KDIR][kk][jj][ii] = 0.0;

134 #if GEOMETRY == CARTESIAN || GEOMETRY == CYLINDRICAL

136 cell_coords[

JDIR][kk][jj][ii] = (float)x2; ,

137 cell_coords[

KDIR][kk][jj][ii] = (float)x3;)

139 D_EXPAND(cell_coords[

IDIR][kk][jj][ii] = (

float)(x1*cos(x2)); ,

140 cell_coords[

JDIR][kk][jj][ii] = (float)(x1*sin(x2)); ,

141 cell_coords[

KDIR][kk][jj][ii] = (float)(x3);)

144 D_EXPAND(cell_coords[

IDIR][kk][jj][ii] = (

float)(x1*sin(x2)); ,

145 cell_coords[

JDIR][kk][jj][ii] = (float)(x1*cos(x2)); ,

146 cell_coords[

KDIR][kk][jj][ii] = 0.0;)

148 D_EXPAND(cell_coords[

IDIR][kk][jj][ii] = (

float)(x1*sin(x2)*cos(x3)); ,

149 cell_coords[

JDIR][kk][jj][ii] = (float)(x1*sin(x2)*sin(x3)); ,

150 cell_coords[

KDIR][kk][jj][ii] = (float)(x1*cos(x2));)

153 print1 (

"! HDF5_IO: Unknown geometry\n");

165 for (nd = 0; nd <

DIMENSIONS; nd++) wgrid[nd] = grid + DIMENSIONS - nd - 1;

168 MPI_Barrier (MPI_COMM_WORLD);

169 if (

prank == 0)time(&tbeg);

172 sprintf (filename,

"%s/data.%04d.%s", output->

dir,output->

nfile, output->

ext);

178 file_access = H5Pcreate(H5P_FILE_ACCESS);

180 H5Pset_fapl_mpiposix(file_access, MPI_COMM_WORLD, 1);

182 H5Pset_fapl_mpio(file_access, MPI_COMM_WORLD, MPI_INFO_NULL);

185 file_access = H5P_DEFAULT;

188 file_identifier = H5Fcreate(filename, H5F_ACC_TRUNC, H5P_DEFAULT, file_access);

190 ierr = H5Pclose(file_access);

192 group = H5Gcreate(file_identifier,

"vars", 0);

196 strspace = H5Screate_simple(1, &dimstr, NULL);

197 string_type = H5Tcopy(H5T_C_S1);

198 H5Tset_size(string_type, strlen(

"/cell_coords/X "));

199 H5Tset_strpad(string_type,H5T_STR_SPACEPAD);

200 stratt = H5Acreate(group,

"coords",string_type,strspace, H5P_DEFAULT);

201 err = H5Awrite(stratt, string_type, coords);

205 for (nd = 0; nd <

DIMENSIONS; nd++) dimens[nd] = wgrid[nd]->np_int_glob;

207 dataspace = H5Screate_simple(rank, dimens, NULL);

211 start[nd] = wgrid[nd]->

beg - wgrid[nd]->

nghost;

213 count[nd] = wgrid[nd]->

np_int;

216 err = H5Sselect_hyperslab(dataspace, H5S_SELECT_SET, start, stride, count, NULL);

219 for (nd = 0; nd <

DIMENSIONS; nd++) dimens[nd] = wgrid[nd]->np_tot;

221 memspace = H5Screate_simple(rank,dimens,NULL);

224 start[nd] = wgrid[nd]->

nghost;

226 count[nd] = wgrid[nd]->

np_int;

228 err = H5Sselect_hyperslab(memspace,H5S_SELECT_SET, start, stride, count, NULL);

234 for (nv = 0; nv < output->

nvar; nv++) {

241 dataset = H5Dcreate(group, output->

var_name[nv], H5T_NATIVE_DOUBLE,

242 dataspace, H5P_DEFAULT);

244 plist_id_mpiio = H5Pcreate(H5P_DATASET_XFER);

245 H5Pset_dxpl_mpio(plist_id_mpiio,H5FD_MPIO_COLLECTIVE);

246 err = H5Dwrite(dataset, H5T_NATIVE_DOUBLE, memspace, dataspace,

247 plist_id_mpiio, output->

V[nv][0][0]);

249 err = H5Dwrite(dataset, H5T_NATIVE_DOUBLE, memspace, dataspace,

250 H5P_DEFAULT, output->

V[nv][0][0]);

256 dataset = H5Dcreate(group, output->

var_name[nv], H5T_NATIVE_FLOAT,

257 dataspace, H5P_DEFAULT);

260 plist_id_mpiio = H5Pcreate(H5P_DATASET_XFER);

261 H5Pset_dxpl_mpio(plist_id_mpiio,H5FD_MPIO_COLLECTIVE);

262 err = H5Dwrite(dataset, H5T_NATIVE_FLOAT, memspace,

263 dataspace, plist_id_mpiio, Vpt);

265 err = H5Dwrite(dataset, H5T_NATIVE_FLOAT, memspace,

266 dataspace, H5P_DEFAULT, Vpt);

271 H5Pclose(plist_id_mpiio);

278 group = H5Gcreate(file_identifier,

"cell_coords", 0);

280 for (nd = 0; nd <

DIMENSIONS; nd++) dimens[nd] = wgrid[nd]->np_int_glob;

281 dataspace = H5Screate_simple(rank, dimens, NULL);

285 start[nd] = wgrid[nd]->

beg - wgrid[nd]->

nghost;

287 count[nd] = wgrid[nd]->

np_int;

289 err = H5Sselect_hyperslab(dataspace, H5S_SELECT_SET,

290 start, stride, count, NULL);

292 for (nd = 0; nd <

DIMENSIONS; nd++) dimens[nd] = wgrid[nd]->np_int;

293 memspace = H5Screate_simple(rank,dimens,NULL);

299 for (nc = 0; nc < 3; nc++) {

300 dataset = H5Dcreate(group, cname[nc], H5T_NATIVE_FLOAT, dataspace, H5P_DEFAULT);

303 plist_id_mpiio = H5Pcreate(H5P_DATASET_XFER);

304 H5Pset_dxpl_mpio(plist_id_mpiio,H5FD_MPIO_COLLECTIVE);

305 err = H5Dwrite(dataset, H5T_NATIVE_FLOAT, memspace,

306 dataspace, plist_id_mpiio, cell_coords[nc][0][0]);

308 err = H5Dwrite(dataset, H5T_NATIVE_FLOAT, memspace,

309 dataspace, H5P_DEFAULT, cell_coords[nc][0][0]);

314 H5Pclose(plist_id_mpiio);

321 group = H5Gcreate(file_identifier,

"node_coords", 0);

323 for (nd = 0; nd <

DIMENSIONS; nd++) dimens[nd] = wgrid[nd]->np_int_glob+1;

325 dataspace = H5Screate_simple(rank, dimens, NULL);

329 start[nd] = wgrid[nd]->

beg - wgrid[nd]->

nghost;

331 count[nd] = wgrid[nd]->

np_int;

332 if (wgrid[nd]->

rbound != 0) count[nd] += 1;

335 err = H5Sselect_hyperslab(dataspace, H5S_SELECT_SET,

336 start, stride, count, NULL);

340 dimens[nd] = wgrid[nd]->

np_int;

341 if (wgrid[nd]->

rbound != 0) dimens[nd] += 1;

343 memspace = H5Screate_simple(rank,dimens,NULL);

349 for (nc = 0; nc < 3; nc++) {

350 dataset = H5Dcreate(group, cname[nc], H5T_NATIVE_FLOAT, dataspace, H5P_DEFAULT);

353 plist_id_mpiio = H5Pcreate(H5P_DATASET_XFER);

354 H5Pset_dxpl_mpio(plist_id_mpiio,H5FD_MPIO_COLLECTIVE);

355 err = H5Dwrite(dataset, H5T_NATIVE_FLOAT, memspace,

356 dataspace, plist_id_mpiio, node_coords[nc][0][0]);

358 err = H5Dwrite(dataset, H5T_NATIVE_FLOAT, memspace,

359 dataspace, H5P_DEFAULT, node_coords[nc][0][0]);

364 H5Pclose(plist_id_mpiio);

384 group = H5Gcreate(file_identifier,

"stag_vars", 0);

393 dimens[nd] = wgrid[nd]->

np_int_glob + (ns == (DIMENSIONS-1-nd));

395 dataspace = H5Screate_simple(rank, dimens, NULL);

399 start[nd] = wgrid[nd]->

beg - wgrid[nd]->

nghost;

401 count[nd] = wgrid[nd]->

np_int;

402 if (ns == DIMENSIONS-1-nd){

403 if (grid[ns].lbound != 0) count[nd] += 1;

407 err = H5Sselect_hyperslab(dataspace, H5S_SELECT_SET,

408 start, stride, count, NULL);

412 dimens[nd] = wgrid[nd]->

np_tot + (ns==(DIMENSIONS-1-nd));

414 memspace = H5Screate_simple(rank,dimens,NULL);

417 start[nd] = wgrid[nd]->

nghost;

419 count[nd] = wgrid[nd]->

np_int;

420 if (ns == (DIMENSIONS-1-nd) && grid[ns].lbound != 0) {

426 err = H5Sselect_hyperslab(memspace,H5S_SELECT_SET, start, stride, count, NULL);

429 dataset = H5Dcreate(group, output->

var_name[

NVAR+ns], H5T_NATIVE_DOUBLE,

430 dataspace, H5P_DEFAULT);

432 plist_id_mpiio = H5Pcreate(H5P_DATASET_XFER);

433 H5Pset_dxpl_mpio(plist_id_mpiio,H5FD_MPIO_COLLECTIVE);

434 err = H5Dwrite(dataset, H5T_NATIVE_DOUBLE, memspace, dataspace,

435 plist_id_mpiio, output->

V[

NVAR+ns][0][0]);

437 err = H5Dwrite(dataset, H5T_NATIVE_DOUBLE, memspace, dataspace,

438 H5P_DEFAULT, output->

V[

NVAR+ns][0][0]);

443 dataset = H5Dcreate(group, output->

var_name[

NVAR+ns], H5T_NATIVE_FLOAT,

444 dataspace, H5P_DEFAULT);

447 plist_id_mpiio = H5Pcreate(H5P_DATASET_XFER);

448 H5Pset_dxpl_mpio(plist_id_mpiio,H5FD_MPIO_COLLECTIVE);

449 err = H5Dwrite(dataset, H5T_NATIVE_FLOAT, memspace,

450 dataspace, plist_id_mpiio, Vpt);

452 err = H5Dwrite(dataset, H5T_NATIVE_FLOAT, memspace,

453 dataspace, H5P_DEFAULT, Vpt);

459 H5Pclose(plist_id_mpiio);

467 H5Fclose(file_identifier);

477 sprintf(xmfext,

"dbl.xmf");

480 sprintf(xmfext,

"flt.xmf");

484 sprintf (filenamexmf,

"%s/data.%04d.%s", output->

dir, output->

nfile, xmfext);

486 fxmf = fopen(filenamexmf,

"w");

487 fprintf(fxmf,

"<?xml version=\"1.0\" ?>\n");

488 fprintf(fxmf,

"<!DOCTYPE Xdmf SYSTEM \"Xdmf.dtd\" []>\n");

489 fprintf(fxmf,

"<Xdmf Version=\"2.0\">\n");

490 fprintf(fxmf,

" <Domain>\n");

491 fprintf(fxmf,

" <Grid Name=\"node_mesh\" GridType=\"Uniform\">\n");

492 fprintf(fxmf,

" <Time Value=\"%12.6e\"/>\n",

g_time);

494 fprintf(fxmf,

" <Topology TopologyType=\"2DSMesh\" NumberOfElements=\"%d %d\"/>\n",

495 wgrid[0]->np_int_glob+1, wgrid[1]->np_int_glob+1);

496 fprintf(fxmf,

" <Geometry GeometryType=\"X_Y\">\n");

498 fprintf(fxmf,

" <DataItem Dimensions=\"%d %d\" NumberType=\"Float\" Precision=\"4\" Format=\"HDF\">\n",

499 wgrid[0]->np_int_glob+1, wgrid[1]->np_int_glob+1 );

500 fprintf(fxmf,

" %s:/node_coords/%s\n",filename,cname[nd]);

501 fprintf(fxmf,

" </DataItem>\n");

503 #elif DIMENSIONS == 3

504 fprintf(fxmf,

" <Topology TopologyType=\"3DSMesh\" NumberOfElements=\"%d %d %d\"/>\n",

505 wgrid[0]->np_int_glob+1, wgrid[1]->np_int_glob+1, wgrid[2]->np_int_glob+1);

506 fprintf(fxmf,

" <Geometry GeometryType=\"X_Y_Z\">\n");

508 fprintf(fxmf,

" <DataItem Dimensions=\"%d %d %d\" NumberType=\"Float\" Precision=\"4\" Format=\"HDF\">\n",

509 wgrid[0]->np_int_glob+1, wgrid[1]->np_int_glob+1, wgrid[2]->np_int_glob+1 );

510 fprintf(fxmf,

" %s:/node_coords/%s\n",filename,cname[nd]);

511 fprintf(fxmf,

" </DataItem>\n");

514 fprintf(fxmf,

" </Geometry>\n");

515 for (nv = 0; nv < output->

nvar; nv++) {

521 fprintf(fxmf,

" <Attribute Name=\"%s\" AttributeType=\"Scalar\" Center=\"Cell\">\n",

524 fprintf(fxmf,

" <DataItem Dimensions=\"%d %d\" NumberType=\"Float\" Precision=\"%d\" Format=\"HDF\">\n",

525 wgrid[0]->np_int_glob, wgrid[1]->np_int_glob, nprec);

526 #elif DIMENSIONS == 3

527 fprintf(fxmf,

" <DataItem Dimensions=\"%d %d %d\" NumberType=\"Float\" Precision=\"%d\" Format=\"HDF\">\n",

528 wgrid[0]->np_int_glob, wgrid[1]->np_int_glob, wgrid[2]->np_int_glob, nprec);

530 fprintf(fxmf,

" %s:/vars/%s\n",filename,output->

var_name[nv]);

531 fprintf(fxmf,

" </DataItem>\n");

532 fprintf(fxmf,

" </Attribute>\n");

535 fprintf(fxmf,

" <Attribute Name=\"%s\" AttributeType=\"Scalar\" Center=\"Cell\">\n",

538 fprintf(fxmf,

" <DataItem Dimensions=\"%d %d\" NumberType=\"Float\" Precision=\"4\" Format=\"HDF\">\n",

539 wgrid[0]->np_int_glob, wgrid[1]->np_int_glob);

540 #elif DIMENSIONS == 3

541 fprintf(fxmf,

" <DataItem Dimensions=\"%d %d %d\" NumberType=\"Float\" Precision=\"4\" Format=\"HDF\">\n",

542 wgrid[0]->np_int_glob, wgrid[1]->np_int_glob, wgrid[2]->np_int_glob);

544 fprintf(fxmf,

" %s:/cell_coords/%s\n",filename,cname[nd]);

545 fprintf(fxmf,

" </DataItem>\n");

546 fprintf(fxmf,

" </Attribute>\n");

548 fprintf(fxmf,

" </Grid>\n");

549 fprintf(fxmf,

" </Domain>\n");

550 fprintf(fxmf,

"</Xdmf>\n");

557 MPI_Barrier (MPI_COMM_WORLD);

560 print1 (

" [%5.2f sec]",difftime(tend,tbeg));

double *** V[64]

pointer to arrays being written - same for all

long int NX1

Number of interior zones in the X1 directions (boundaries excluded) for the local processor...

void print1(const char *fmt,...)

int nfile

current number being saved - one per output

int np_int_glob

Total number of points in the global domain (boundaries excluded).

int rbound

Same as lbound, but for the right edge of the grid.

char dir[256]

output directory name

float *** Convert_dbl2flt(double ***Vdbl, double unit, int swap_endian)

char ext[8]

output extension

long int NX2

Number of interior zones in the X2 directions (boundaries excluded) for the local processor...

int beg

Global start index for the local array.

#define ARRAY_4D(nx, ny, nz, nv, type)

int nghost

Number of ghost zones.

int * dump_var

select vars being written - one per output

D_EXPAND(tot/[n]=(double) grid[IDIR].np_int_glob;, tot/[n]=(double) grid[JDIR].np_int_glob;, tot/[n]=(double) grid[KDIR].np_int_glob;)

double g_time

The current integration time.

int type

output format (DBL, FLT, ...) - one per output

int * stag_var

centered or staggered variable - same for all

long int KBEG

Lower grid index of the computational domain in the the X3 direction for the local processor...

int np_tot

Total number of points in the local domain (boundaries included).

long int JBEG

Lower grid index of the computational domain in the the X2 direction for the local processor...

#define QUIT_PLUTO(e_code)

long int NX3

Number of interior zones in the X3 directions (boundaries excluded) for the local processor...

char ** var_name

variable names - same for all

long int IBEG

Lower grid index of the computational domain in the the X1 direction for the local processor...

int np_int

Total number of points in the local domain (boundaries excluded).